I recently had an interesting request from a Data Scientist on our team. He’d been extracting some data from one of our tabular models, but he was having trouble getting his predictive model to work reliably.

We already had a query similar to the following, with a couple of group-by columns, one or two filters and a handful of measures:

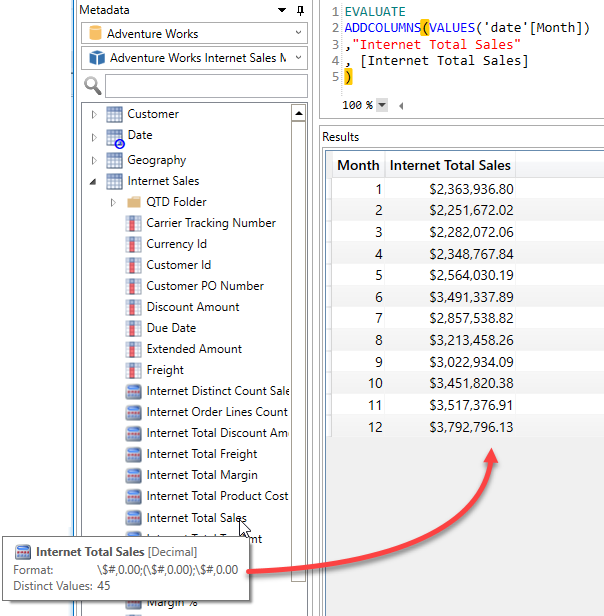

EVALUATE

SUMMARIZECOLUMNS(

'Table1'[Column1],

'Table2'[Column2],

FILTER(ALL('Table1'[Column3]), 'Table1'[Column3] = "Value"),

"Measure1", [Measure1],

"Measure2", [Measure2]

)

Up until this point we had been manually adding measures that we thought might influence the behaviour we were trying to predict, but this was a slow, trial-and-error process. So the Data Scientist rang me and said “You know what? Why don’t you just give me an extract with all the measures?”. “You do realise we have over 1,000 measures,” I said, “because we have lots of time intelligence variations like Current Month, Previous Month, Month over Month variance, etc.” “That’s fine,” he replied, “I can always ignore any that I don’t want or that are not significant. You can just do something like a SELECT *, right?”

If you’ve ever written your own DAX queries, you’ll know that you can run a query like the following to get all the columns in a single table:

EVALUATE 'Table'

But that will not get you any measures; you have to list the measures manually, one by one. At this point I knew that I really did not want to spend hours hand-typing a query with over 1,000 measures, so I started thinking about what options I might have for generating this query.

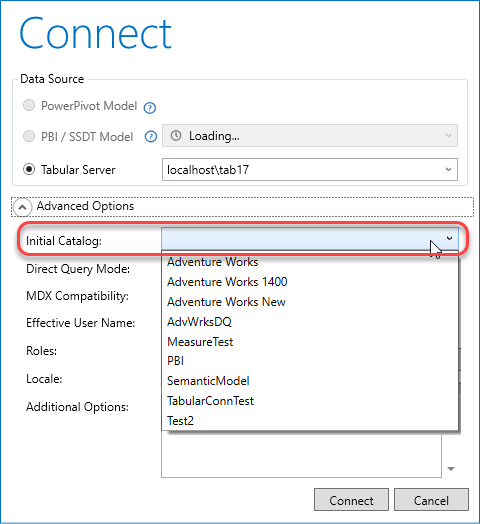

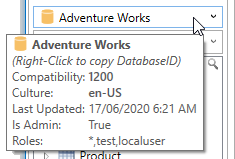

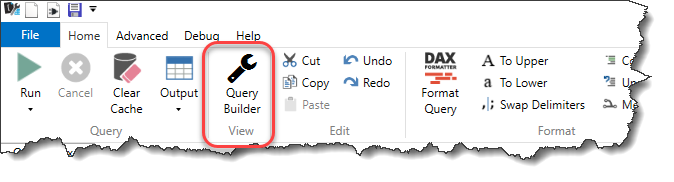

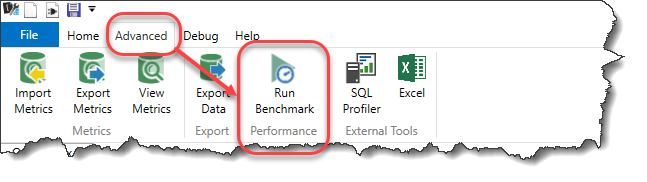

I knew I could probably build some sort of foreach loop in PowerShell using AMO/TOM, or maybe use the Advanced Scripting feature in Tabular Editor. But I also knew that I could easily get a list of all the visible measures by querying the $SYSTEM.TMSCHEMA_MEASURES or $SYSTEM.MDSCHEMA_MEASURES DMVs using DAX Studio.

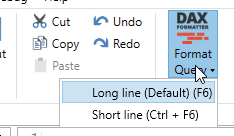

After a bit of experimenting I ended up with the following expression, which builds a list of all the visible measures in the model in the "Name", Expression format that SUMMARIZECOLUMNS needs:

SELECT '"' + [Name] + '", ' as [Caption], '[' + [Name] + '],' as [Name]

FROM $SYSTEM.TMSCHEMA_MEASURES

WHERE NOT [IsHidden]

ORDER BY [Name]

Then I was simply able to paste the output from this query after the filters in our existing query (the trailing comma after the last measure has to be removed first) and run it. Job done.
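If you would rather script the paste step than do it by hand, the same idea can be sketched in a few lines of Python. This is only an illustration and not what was used for the extract described here: the function names `build_measure_args` and `build_query` are made up for this example, and it assumes you have already exported the measure names from the DMV query into a plain list. It also doubles any `"` or `]` characters in a measure name, which is how DAX escapes them in string literals and bracketed references.

```python
def build_measure_args(measure_names):
    """Return the '"Name", [Name]' argument lines for SUMMARIZECOLUMNS."""
    lines = []
    for name in measure_names:
        # A double quote inside a DAX string literal is escaped by doubling it.
        caption = name.replace('"', '""')
        # A closing bracket inside a bracketed DAX reference is escaped by doubling it.
        reference = name.replace("]", "]]")
        lines.append(f'    "{caption}", [{reference}]')
    # Joining with ",\n" means no trailing comma is left after the last measure.
    return ",\n".join(lines)


def build_query(group_by_columns, filters, measure_names):
    """Assemble the full query text from group-by columns, filters and measures."""
    args = [f"    {item}" for item in list(group_by_columns) + list(filters)]
    body = ",\n".join(args)
    measures = build_measure_args(measure_names)
    if measures:
        body = body + ",\n" + measures if body else measures
    return "EVALUATE\nSUMMARIZECOLUMNS(\n" + body + "\n)"


# Hypothetical inputs mirroring the placeholder query earlier in this post.
print(
    build_query(
        ["'Table1'[Column1]", "'Table2'[Column2]"],
        ["FILTER(ALL('Table1'[Column3]), 'Table1'[Column3] = \"Value\")"],
        ["Measure1", "Measure2"],
    )
)
```

The printed text can be pasted straight into DAX Studio. Building the argument list with a join, rather than appending a comma to every line, avoids having to trim the last line by hand.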
